Ep 1. Introduction to Quantum Computing

Quantum computing is a fascinating and complex subject. This article will serve as an introductory guide, designed to usher beginners into this field.

Understanding Quantum Computing

Quantum computing is an innovative technology that harnesses the principles of quantum mechanics to process information. It differs markedly from classical computing: instead of manipulating bits that are always either 0 or 1, it manipulates quantum states, which makes new kinds of algorithms possible for certain problems.

How Quantum Computing Differs from Classical Computing

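Classical computing is based on binary digits, or “bits,” each of which represents either a 0 or a 1. By contrast, quantum computing uses quantum bits, or “qubits.” Thanks to the quantum property called superposition, a qubit can exist in a weighted combination of 0 and 1 at the same time; when it is measured, it yields a single 0 or 1 with a probability set by those weights. A register of n qubits can hold a superposition over all 2^n bit patterns, which lets quantum algorithms work with a large number of possibilities at once.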
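
To make the idea of superposition concrete, here is a minimal sketch in plain Python. It assumes a qubit can be modeled as a pair of amplitudes for 0 and 1, with the probability of each measurement outcome given by the squared magnitude of its amplitude. The names `measurement_probabilities`, `zero`, and `plus` are illustrative choices, not part of any quantum library.

```python
import math

# A single-qubit state modeled as a pair of amplitudes (alpha, beta)
# for the outcomes 0 and 1. This representation is an assumption made
# for illustration.
def measurement_probabilities(state):
    """Return (P(0), P(1)) for a normalized single-qubit state."""
    alpha, beta = state
    return abs(alpha) ** 2, abs(beta) ** 2

zero = (1.0, 0.0)                            # always measures as 0
plus = (1 / math.sqrt(2), 1 / math.sqrt(2))  # equal superposition of 0 and 1

p0, p1 = measurement_probabilities(plus)
print(round(p0, 3), round(p1, 3))  # prints: 0.5 0.5
```

The equal superposition gives a 50/50 chance of reading 0 or 1, while the `zero` state always reads 0.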

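Additionally, qubits can be linked through another quantum property called entanglement. When qubits are entangled, their measurement outcomes are correlated no matter how far apart they are: measuring one immediately tells you something about the other, although this cannot be used to send information faster than light. Together with superposition, entanglement is a key resource behind the speedups that quantum algorithms achieve on certain problems.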
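
The correlation that entanglement produces can be sketched with a small simulation. The example below assumes a two-qubit state can be written as four amplitudes, one for each of the outcomes 00, 01, 10, and 11, and uses the standard Bell state (|00> + |11>) / sqrt(2) as the entangled pair. `BELL`, `LABELS`, and `sample_measurements` are hypothetical names chosen for this illustration.

```python
import math
import random

# Bell state (|00> + |11>) / sqrt(2): amplitudes for the outcomes
# 00, 01, 10, 11, in that order.
BELL = [1 / math.sqrt(2), 0.0, 0.0, 1 / math.sqrt(2)]
LABELS = ["00", "01", "10", "11"]

def sample_measurements(state, shots, rng):
    """Sample joint outcomes, each with probability |amplitude|^2."""
    weights = [abs(a) ** 2 for a in state]
    return rng.choices(LABELS, weights=weights, k=shots)

rng = random.Random(0)  # fixed seed so the run is repeatable
outcomes = sample_measurements(BELL, 1000, rng)

# Each qubit on its own looks like a coin flip, but the two bits
# in every sampled outcome are equal.
agree = all(o[0] == o[1] for o in outcomes)
print(agree, sorted(set(outcomes)))
```

Only 00 and 11 ever appear, so the two qubits always agree even though neither result is predictable in advance.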

Key Terms and Concepts in Quantum Computing

In order to fully understand quantum computing, it’s essential to grasp a few key concepts:

-

Qubit: The basic unit of quantum information, which can be both 0 and 1 at the same time due to superposition.

-

Superposition: A quantum state where a system can be in multiple states simultaneously.

-

Entanglement: The phenomenon where the state of one qubit is directly related to the state of another, no matter the distance separating them.

-

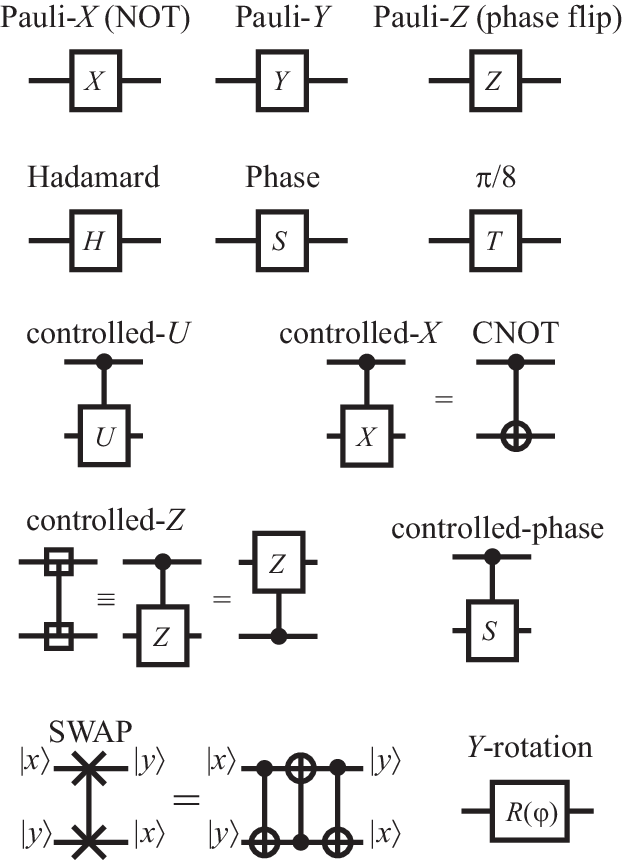

Quantum Gates: Basic quantum circuits that operate on a small number of qubits. They perform the actual computation in a quantum system. Just like logic gates in classical circuits, quantum gates are a primary building block of quantum computing.

- Quantum Circuits: A sequence of quantum gates. They describe quantum computations and are used to manipulate qubits. You can think of them as the quantum version of classical computing circuits.

- Quantum Interference: The way probability amplitudes add up to reinforce or cancel each other. Quantum algorithms rely on this effect to make correct answers more likely and incorrect ones less likely.
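
Gates, circuits, and interference can be seen together in a small sketch. It assumes a gate is a 2x2 matrix acting on a single qubit's two amplitudes and a circuit is simply a list of gates applied in order. The Hadamard and NOT (X) matrices are the standard ones; the helper names `apply_gate` and `run_circuit` are made up for this example.

```python
import math

S = 1 / math.sqrt(2)
H = [[S, S], [S, -S]]  # Hadamard gate: turns 0 into an equal superposition
X = [[0, 1], [1, 0]]   # NOT gate: swaps the amplitudes of 0 and 1

def apply_gate(gate, state):
    """Multiply a 2x2 gate matrix by a 2-element state vector."""
    return [sum(gate[r][c] * state[c] for c in range(2)) for r in range(2)]

def run_circuit(gates, state):
    """Apply a sequence of gates (a tiny 'circuit') from first to last."""
    for gate in gates:
        state = apply_gate(gate, state)
    return state

zero = [1.0, 0.0]
after_one_h = run_circuit([H], zero)     # equal superposition of 0 and 1
after_two_h = run_circuit([H, H], zero)  # amplitudes for 1 cancel out

probs = [round(abs(a) ** 2, 6) for a in after_two_h]
print(probs)  # prints: [1.0, 0.0]
```

After one Hadamard gate the qubit is equally likely to read 0 or 1. After the second, the two contributions to the 1 amplitude have opposite signs and cancel, while the contributions to 0 reinforce each other, so the qubit reads 0 with certainty.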
